Some people are made uneasy by how integer types in many programming languages can also be treated as bitfields (so that, for example, you can XOR two of them).


Some even regard it with a kind of moral disgust. What's up with that?
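To make the dual use concrete, here is a minimal Python sketch. The values 12 and 10 are arbitrary, chosen only for illustration. The same two `int` variables are added as numbers and XOR-ed as bit patterns, and the XOR result, 6, is far easier to explain bit by bit than as a quantity.

```python
# The same integer-typed values, read two different ways.
a = 12  # binary 1100
b = 10  # binary 1010

# Read as numbers: ordinary arithmetic on quantities.
total = a + b  # 22

# Read as bitfields: XOR flips each bit of a wherever b has a 1.
toggled = a ^ b  # binary 0110, i.e. 6

print(total, toggled, bin(toggled))
```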

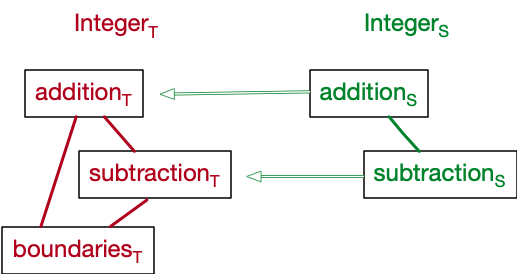

Integer as a Metaphor drew this picture of the mapping between a computer integer and the mathematical idea:

(Double-click to enlarge)

Bitfields don't fit into this Metaphor System. Reasoning about this different use of *the same object* (an integer-typed variable, say) has to be done by switching away from this metaphor: perhaps to a metaphor for bitfields, perhaps to something else.

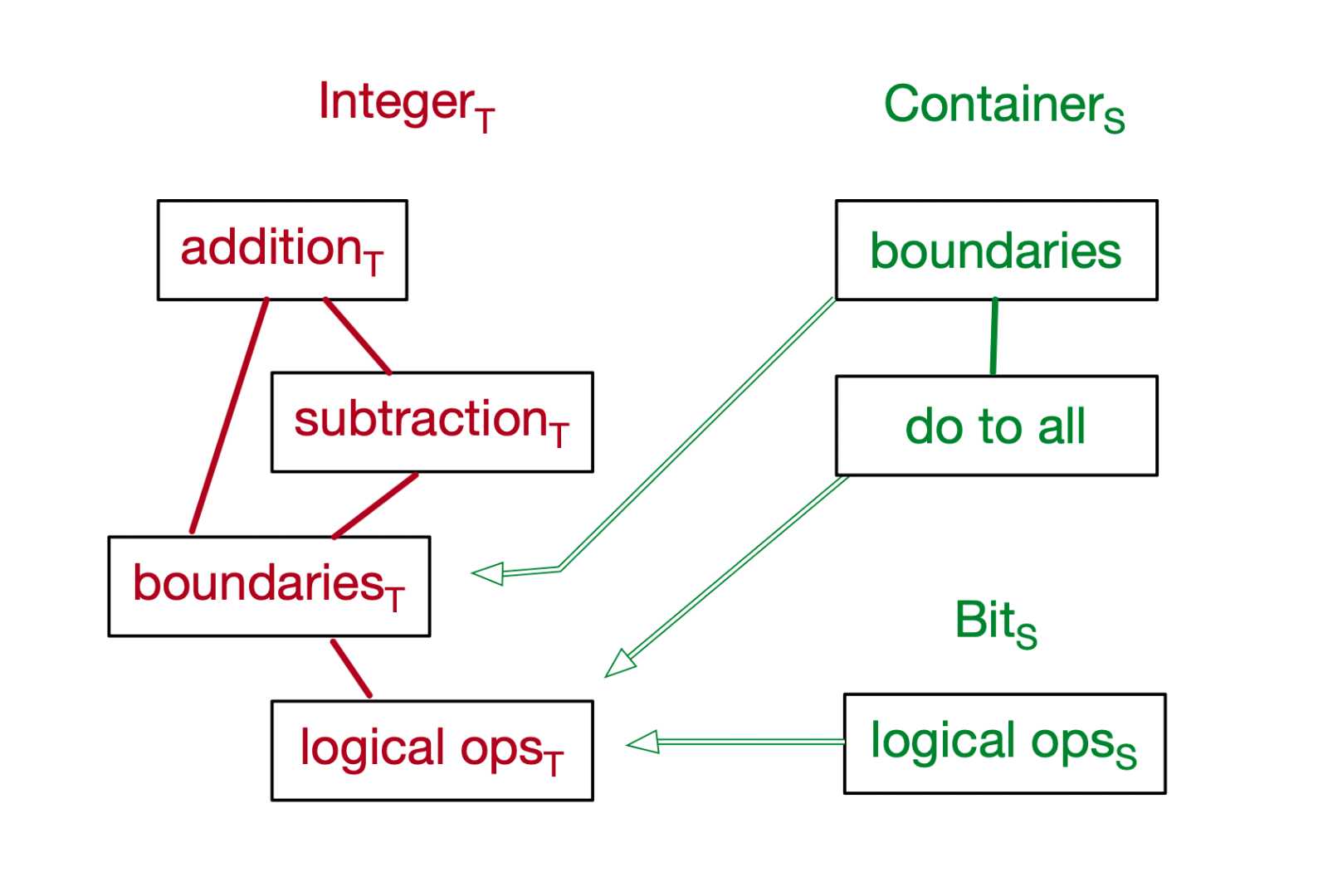

Let's suppose it's a metaphor. I imagine something like this:

We're combining our understanding of how binary digits work (simple Boolean logic) with the idea of an integer as a container for a bunch of bits that you can operate on as a unit.
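Here is a minimal Python sketch of that combined picture, in which each bit is a Boolean and one bitwise operator applies Boolean logic to every bit position at once. The flag names `READ`, `WRITE`, `EXECUTE` and the helper `bit` are hypothetical, invented only for illustration. One caveat: Python's `int` is arbitrary-precision rather than a fixed-width machine integer, so `~WRITE` is a negative number. Masking with it still works because Python defines bitwise operators as if on an infinite two's-complement representation.

```python
# Integer as a container of bits: each bit is a Boolean, and a single
# bitwise operator applies Boolean logic to every bit position in parallel.

def bit(x: int, i: int) -> bool:
    """Return bit i of x as a Boolean (bit 0 is the least significant)."""
    return ((x >> i) & 1) == 1

# Hypothetical flag names, one bit position each (for illustration only).
READ = 1 << 0     # 0b001
WRITE = 1 << 1    # 0b010
EXECUTE = 1 << 2  # 0b100

perms = READ | WRITE           # set two bits in one operation -> 0b011
perms_ro = perms & ~WRITE      # clear one bit, leave the others alone -> 0b001
can_exec = (perms & EXECUTE) != 0  # test a single bit -> False

# AND on the whole integer equals Boolean `and` applied to each bit separately.
a, b = 0b1100, 0b1010
assert all(bit(a & b, i) == (bit(a, i) and bit(b, i)) for i in range(4))

print(bin(perms), bin(perms_ro), can_exec)
```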

Thinking differently

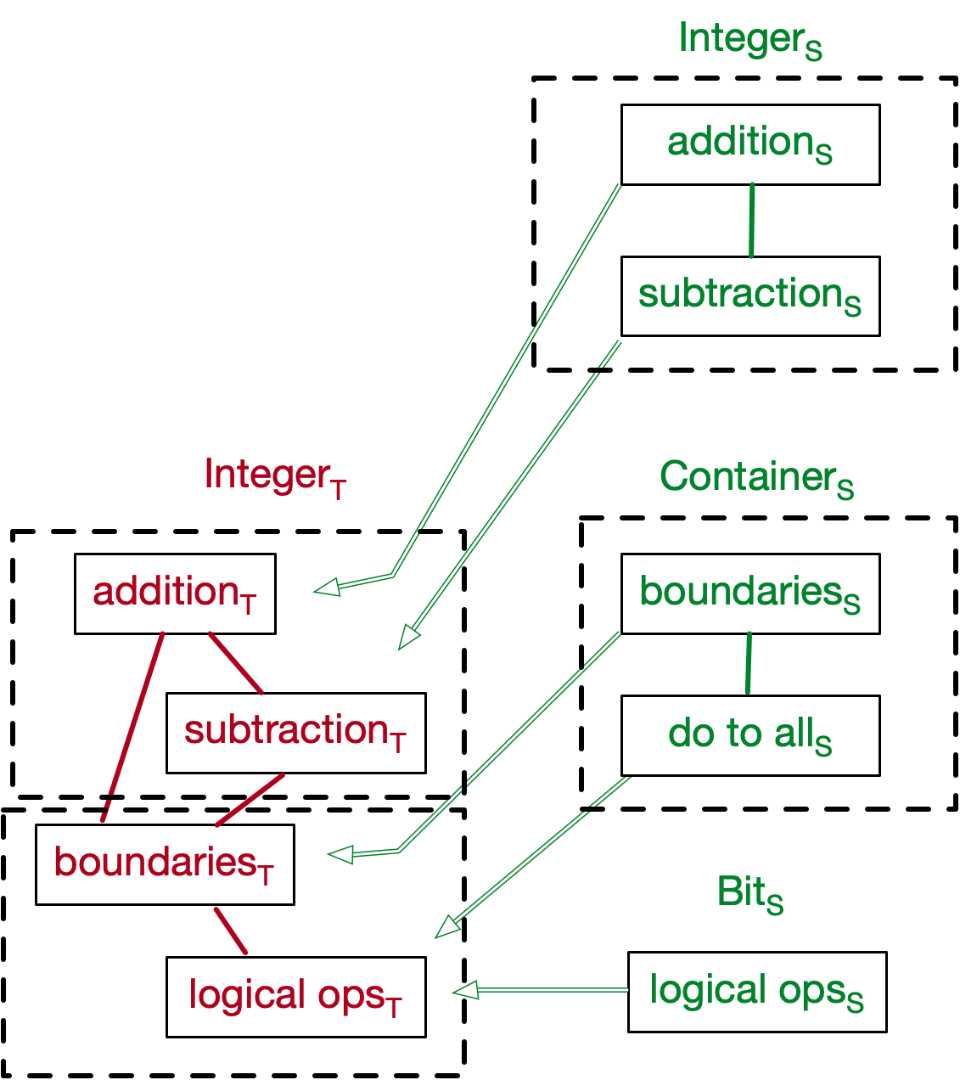

The problem here is that the target concept of Integer is mapped to two different metaphor systems. Worse, these systems are disjoint in both the source and target domains:

Disjointness

According to *Metaphors We Live By*, it's not unusual for more than one metaphor to be in play at once when understanding something or solving a problem. However, Lakoff and Johnson don't hold that just any two Metaphor Systems can be used together; the metaphors have to cohere. See Coherent Metaphors.

The two metaphors illustrated here don't seem to cohere much, so I can see why combining them would bother some people.